Quick Start

This guide covers every Hume AI feature:

- Getting Started: Create an account and complete the basic setup

- How to Use Octave TTS: Generate expressive voices from text prompts

- How to Use Empathic Voice Interface (EVI): Build real-time conversational AI agents

- How to Use Expression Measurement API: Detect emotions from voice, video, and text

- How to Use Conversational Voice: Create natural voice interactions

- How to Use TTS Creator Studio: Produce multi-character audio projects from scripts

- How to Use Custom Voice Persona: Build unique AI voices from prompts or recordings

- How to Use Multimodal Analysis: Analyze emotions across audio, video, and text

Time needed: 5 minutes per feature

Also in this guide: Pro Tips | Common Mistakes | Troubleshooting | Pricing | Alternatives

Why Trust This Guide

I’ve used Hume AI for over 6 months and tested every feature covered here. This guide comes from real hands-on experience, not marketing fluff or vendor screenshots.

Hume AI is one of the most powerful voice AI and emotion detection tools available today.

But most users only scratch the surface of what it can do.

This guide shows you how to use every major feature, step by step, with screenshots and pro tips.

Hume AI Tutorial

This complete Hume AI tutorial walks you through every feature step by step, from initial setup to advanced tips that will make you a power user.

Hume AI

Create expressive AI voices that understand emotion and context. Hume AI’s Octave TTS generates human-like speech in 11 languages with under 200ms latency. Start free with 10,000 characters per month.

Getting Started with Hume AI

Before using any feature, complete this one-time setup.

It takes about 3 minutes.

Now let’s walk through each step.

Step 1: Create Your Account

Go to Hume AI’s website.

Click “Sign Up” in the top right corner.

Enter your email and create a password.

You can also sign up with Google or GitHub.

✓ Checkpoint: Check your inbox for a confirmation email.

Step 2: Access the Platform Dashboard

Hume AI is a web-based platform — no downloads needed.

Log in at app.hume.ai with your new account.

✓ Checkpoint: You should see the main dashboard with Octave TTS and EVI options.

Step 3: Get Your API Key

Click “Settings” then “API Keys” in the sidebar.

Click “Create API Key” and copy it somewhere safe.

You’ll need this for API access and SDK setup.

New accounts start with $20 in free credits.

✅ Done: You’re ready to use any feature below.
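
Here is a minimal sketch of keeping that key out of your source code: read it from an environment variable and build request headers from it. The variable name `HUME_API_KEY` and the `X-Hume-Api-Key` header name are assumptions on my part, so confirm both against Hume's API documentation before relying on them.

```python
import os


def load_api_key(env=None):
    """Return the API key from the environment, or raise if it is missing."""
    env = os.environ if env is None else env
    # HUME_API_KEY is an assumed variable name, not one mandated by Hume.
    key = env.get("HUME_API_KEY", "").strip()
    if not key:
        raise RuntimeError("Set HUME_API_KEY before calling the API")
    return key


def auth_headers(api_key):
    """Build request headers. The header name is an assumption to verify."""
    return {"X-Hume-Api-Key": api_key, "Content-Type": "application/json"}
```

Failing fast on a missing key makes the "Unauthorized" class of errors easier to tell apart from a simple setup mistake.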

How to Use Hume AI Octave TTS

Octave TTS lets you turn text into expressive, emotion-aware speech.

Here’s how to use it step by step.

Now let’s break down each step.

Step 1: Open the TTS Playground

Go to the Hume AI platform and click “Text to Speech.”

This opens the Octave TTS playground.

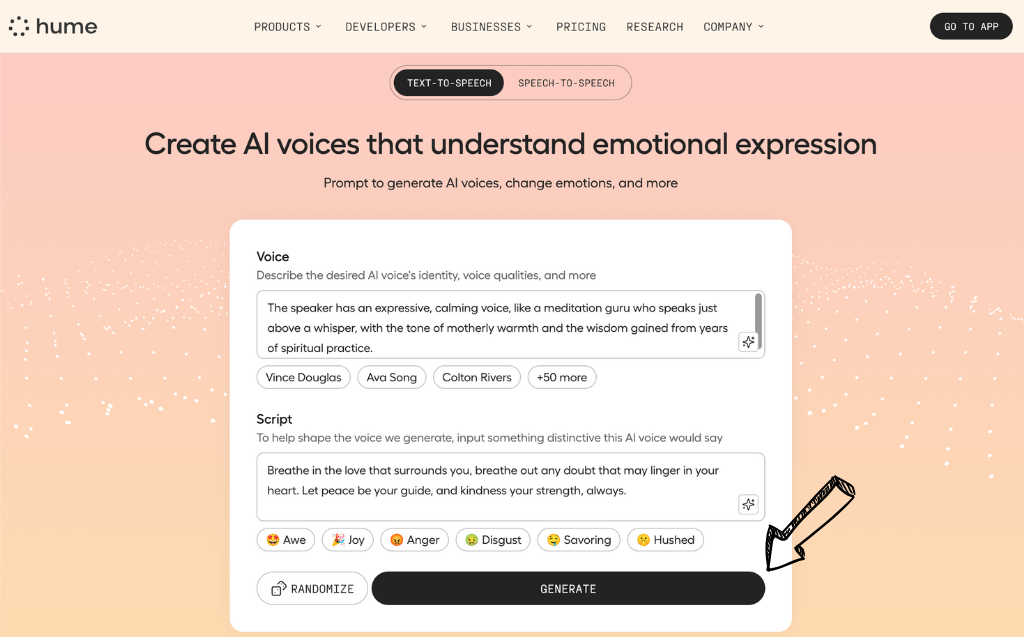

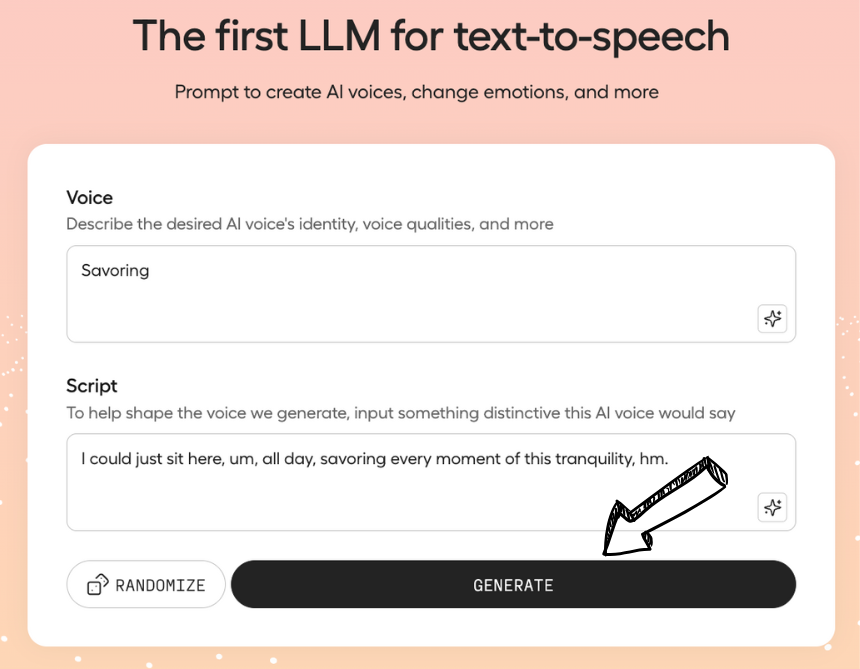

Step 2: Choose a Voice and Enter Your Text

Pick from over 100 preset voices or create a custom one.

Type or paste your text into the input field.

Add emotional instructions like “speak with excitement” in the prompt.

✓ Checkpoint: You should see your text with a voice selected and emotion settings applied.

Step 3: Generate and Download Audio

Click “Generate” to create your audio.

Octave starts returning speech in under 200 milliseconds.

Click “Download” to save the audio file.

✅ Result: You’ve created expressive, emotion-aware speech from plain text.

💡 Pro Tip: Use natural language descriptions like “whisper fearfully” or “speak with warm confidence” for the best emotional results. Octave understands context, so detailed prompts produce better voices.
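
If you would rather script this than click through the playground, the same text-plus-direction idea can be sketched as a request body. This is a rough sketch only: the endpoint URL and the `utterances`, `text`, and `description` field names are assumptions based on my reading of the API, and the sketch builds and decodes data without making any network call.

```python
import base64
import json

# Assumed endpoint. Check the current API reference before relying on it.
TTS_URL = "https://api.hume.ai/v0/tts"


def build_tts_payload(text, description=None):
    """Return the JSON body for one utterance (field names are assumptions)."""
    utterance = {"text": text}
    if description:
        # Natural-language acting direction, e.g. "whisper fearfully".
        utterance["description"] = description
    return json.dumps({"utterances": [utterance]})


def save_audio(b64_audio, path):
    """Decode base64-encoded audio and write it to disk; return byte count."""
    data = base64.b64decode(b64_audio)
    with open(path, "wb") as f:
        f.write(data)
    return len(data)


payload = build_tts_payload("Welcome back.", "speak with warm confidence")
```

You would POST `payload` to the endpoint with your API key in the headers, then pass the base64 audio from the response to `save_audio`.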

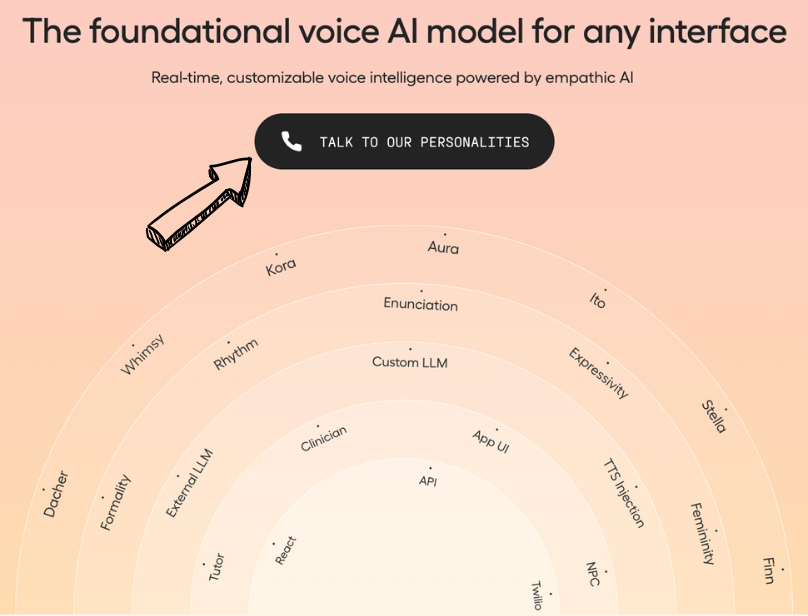

How to Use Hume AI Empathic Voice Interface (EVI)

Empathic Voice Interface (EVI) lets you build real-time conversational AI agents that respond with human-like empathy.

Here’s how to use it step by step.

Now let’s break down each step.

Step 1: Navigate to EVI Settings

Click “Empathic Voice Interface” in the platform sidebar.

Select “Create Configuration” to start a new EVI setup.

Step 2: Configure Your Voice Agent

Choose a voice persona for your agent.

Set the system prompt to define personality and behavior.

EVI detects emotions in the user’s voice and adapts responses.

✓ Checkpoint: You should see your EVI configuration with voice and prompt settings active.

Step 3: Test Your Voice Agent

Click the microphone button to start a live conversation.

Speak naturally and listen to the empathetic responses.

EVI picks up on your emotional tone and adjusts in real time.

✅ Result: You’ve built a conversational AI agent that detects and responds to emotions in real time.

💡 Pro Tip: Use EVI’s conversation history feature to analyze past interactions and fine-tune your agent’s responses over time.

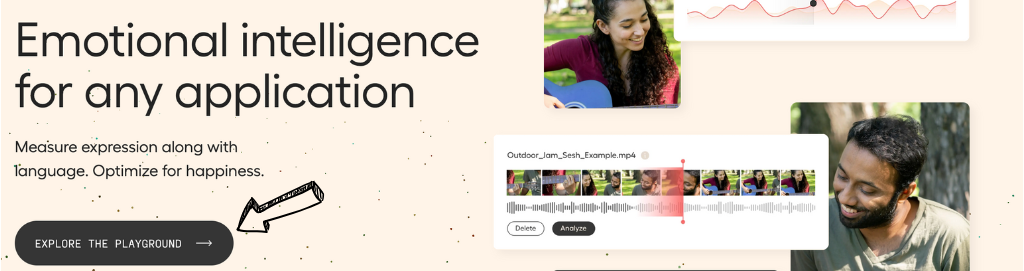

How to Use Hume AI Expression Measurement API

Expression Measurement API lets you detect over 25 distinct emotions from voice, face, and text.

Here’s how to use it step by step.

Now let’s break down each step.

Step 1: Choose Your Analysis Type

Select whether you want to analyze audio, video, images, or text.

Each type detects different emotional signals.

Step 2: Upload or Stream Your Media

Upload a file for batch processing or use the Streaming API for real-time analysis.

The Batch API handles large volumes of recorded media.

The Streaming API works for live audio and video feeds.

✓ Checkpoint: You should see your media file uploaded or your stream connected.

Step 3: Review the Emotion Results

The API returns detailed emotion scores for each segment.

Results include emotions like joy, sadness, anger, and surprise.

You can visualize results in the platform dashboard.

✅ Result: You’ve analyzed emotions in audio, video, or text with detailed scoring.
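
Once you have the scores, the first thing you usually want is the strongest few emotions per segment. Here is a small sketch of that step. The list-of-dicts shape with `name` and `score` keys is an assumption about how you have parsed the results, and the numbers are made up for illustration.

```python
def top_emotions(scores, n=3):
    """Return the n highest-scoring emotions as (name, score) pairs."""
    ranked = sorted(scores, key=lambda e: e["score"], reverse=True)
    return [(e["name"], e["score"]) for e in ranked[:n]]


# Illustrative values only, not real API output.
segment = [
    {"name": "Joy", "score": 0.62},
    {"name": "Sadness", "score": 0.05},
    {"name": "Anger", "score": 0.02},
    {"name": "Surprise", "score": 0.31},
]

strongest = top_emotions(segment, n=2)
```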

💡 Pro Tip: Choose “Audio Only” analysis at $0.0639/min instead of “Video with Audio” at $0.0828/min if you don’t need facial expression data. This saves about 23% on costs.
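
You can check the savings yourself with the two per-minute rates quoted in the tip. The function names here are just illustrative helpers.

```python
AUDIO_ONLY_PER_MIN = 0.0639        # USD per minute, from the tip above
VIDEO_WITH_AUDIO_PER_MIN = 0.0828  # USD per minute, from the tip above


def savings_percent(cheaper, pricier):
    """Percentage saved by choosing the cheaper rate."""
    return (pricier - cheaper) / pricier * 100


def job_cost(minutes, rate_per_min):
    """Total cost in USD for a job of the given length."""
    return minutes * rate_per_min


saving = savings_percent(AUDIO_ONLY_PER_MIN, VIDEO_WITH_AUDIO_PER_MIN)
print(f"Audio-only saves {saving:.1f}%")  # prints "Audio-only saves 22.8%"
```

For 1,000 minutes of media, that works out to $63.90 versus $82.80, a difference of $18.90.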

How to Use Hume AI Conversational Voice

Conversational Voice lets you create natural back-and-forth voice interactions for apps and games.

Here’s how to use it step by step.

Now let’s break down each step.

Step 1: Set Up a Voice Configuration

Go to the Voice section and create a new configuration.

Define the personality, speaking style, and emotional range.

Step 2: Connect via WebSocket API

Use the WebSocket streaming endpoint for real-time voice interaction.

Hume provides SDKs for TypeScript, Python, and .NET.

The connection supports mid-session voice switching.

✓ Checkpoint: Your WebSocket connection should be active with audio streaming.

Step 3: Test the Conversation Flow

Speak into your microphone and listen to the AI respond.

The voice adapts to your emotional tone in real time.

Response latency is under 200 milliseconds with Octave 2.

✅ Result: You’ve built a real-time conversational voice experience with emotional awareness.

💡 Pro Tip: Use mid-session voice switching to change characters during a conversation without reconnecting the WebSocket.
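
To give a feel for what travels over that WebSocket, here is a sketch of slicing raw audio into chunks and wrapping each one as a JSON text frame. The `audio_input` message type and the base64 `data` field are assumptions about the message format, and the chunk size is arbitrary. The sketch uses only the standard library and leaves the actual connection to the SDK or WebSocket client you choose.

```python
import base64
import json


def audio_chunks(pcm_bytes, chunk_size=4096):
    """Yield fixed-size slices of raw audio for streaming."""
    for start in range(0, len(pcm_bytes), chunk_size):
        yield pcm_bytes[start:start + chunk_size]


def audio_message(chunk):
    """Wrap one chunk as a JSON text frame (message shape is assumed)."""
    return json.dumps({
        "type": "audio_input",
        "data": base64.b64encode(chunk).decode("ascii"),
    })


# Stand-in for microphone audio: 10,000 bytes of silence.
frames = [audio_message(c) for c in audio_chunks(bytes(10_000))]
```

Each string in `frames` would be sent as one message on the open connection.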

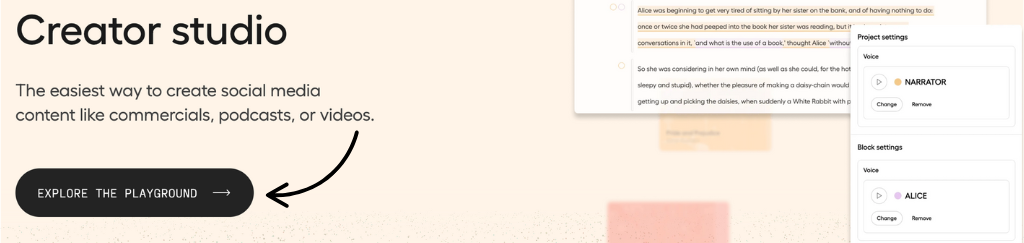

How to Use Hume AI TTS Creator Studio

TTS Creator Studio lets you create long-form audio projects with multiple characters and scenes.

Here’s how to use it step by step.

Now let’s break down each step.

Step 1: Create a New Project

Click “Projects” in the sidebar and select “New Project.”

Name your project and choose the content type.

Step 2: Assign Voices to Characters

Use the Script Editor to add dialogue lines.

Assign a different voice to each character in your script.

Octave keeps each voice consistent across the entire project.

✓ Checkpoint: Each character should have a unique voice assigned with dialogue lines ready.

Step 3: Generate and Export Audio

Click “Generate All” to create audio for the full script.

The platform chunks long text automatically.

Export the final audio when you’re satisfied.

✅ Result: You’ve produced a multi-character audio project with consistent voices throughout.

💡 Pro Tip: For audiobooks, add emotional direction per line like “whisper this secretly” to bring scenes to life.
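
The platform handles chunking for you, but if you prepare long scripts outside the studio it helps to see the idea. This is a generic sentence-boundary chunker I use as a sketch. It is not Hume's actual chunking logic, and the 500-character default is an arbitrary choice.

```python
import re


def chunk_text(text, max_chars=500):
    """Split text into chunks of at most max_chars, breaking between sentences.

    A single sentence longer than max_chars is kept whole in its own chunk.
    """
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            chunks.append(current)
            current = sentence
    if current:
        chunks.append(current)
    return chunks


parts = chunk_text("One. Two! Three?", max_chars=9)
```

Breaking between sentences keeps each chunk a natural unit of speech, which tends to matter more for delivery than hitting an exact size.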

How to Use Hume AI Custom Voice Persona

Custom Voice Persona lets you build unique AI voices from text prompts or audio recordings as short as 5 seconds.

Here’s how to use it step by step.

Step 1: Choose Your Creation Method

Go to “Voices” and click “Create Voice.”

Choose between text prompt or audio clone.

Step 2: Design or Clone Your Voice

For text prompts, describe the voice in detail.

Try something like “a warm 40-year-old British man, calm and thoughtful.”

For cloning, upload a clean audio recording of at least 5 seconds.

✓ Checkpoint: Your voice persona should appear in the voice library.

Step 3: Test and Save Your Voice

Type a sample sentence and click “Generate” to preview.

Adjust the description until the voice matches your vision.

Save the voice to use across all your projects.

✅ Result: You’ve created a reusable custom voice persona for all your projects.

💡 Pro Tip: Include personality traits in your voice description, not just physical characteristics. “Sarcastic and witty” produces very different results than “cheerful and encouraging.”
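
Before uploading a recording for cloning, it is worth checking that it clears the 5-second minimum. Here is a sketch using Python's built-in `wave` module, which only reads uncompressed WAV files. The helper names are hypothetical, and other formats such as MP3 would need a different library.

```python
import wave


def wav_duration_seconds(source):
    """Return the duration of a WAV file given a path or file-like object."""
    with wave.open(source, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def long_enough(source, minimum=5.0):
    """True if the recording is at least `minimum` seconds long."""
    return wav_duration_seconds(source) >= minimum
```

Call `long_enough("sample.wav")` for the minimum, or pass `minimum=15` if you want to hold yourself to a longer clip.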

How to Use Hume AI Multimodal Analysis

Multimodal Analysis lets you analyze emotions across audio, video, and text simultaneously.

Here’s how to use it step by step.

Step 1: Select Your Input Sources

Choose which modalities to analyze: voice, face, or language.

You can combine multiple sources for deeper insights.

Step 2: Upload Your Media Files

Upload video files that contain both audio and visual data.

The API processes facial expressions, vocal tones, and spoken words together.

✓ Checkpoint: Your files should be uploaded with all selected modalities active.

Step 3: Review Combined Emotion Data

View the unified emotion timeline across all input sources.

Compare how facial expressions match vocal emotion cues.

Export the data for use in your own applications.

✅ Result: You’ve performed a full multimodal emotion analysis combining voice, face, and text data.

💡 Pro Tip: Multimodal analysis catches emotions that single-source analysis misses. A calm voice paired with a tense facial expression reveals stress better than audio alone.
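
That kind of mismatch is easy to flag once you have per-modality scores side by side. Here is a sketch that assumes you have already reduced each modality to a dict of emotion name to score. Both the dict shape and the 0.3 threshold are my own assumptions, and the numbers are invented for illustration.

```python
def modality_gaps(face, voice, threshold=0.3):
    """Return emotions whose face and voice scores differ by >= threshold.

    Values are face minus voice, rounded to two decimals.
    """
    gaps = {}
    for name in face.keys() & voice.keys():
        diff = face[name] - voice[name]
        if abs(diff) >= threshold:
            gaps[name] = round(diff, 2)
    return gaps


# Illustrative scores: a calm voice paired with a tense face.
face = {"Calmness": 0.2, "Anxiety": 0.7, "Joy": 0.10}
voice = {"Calmness": 0.8, "Anxiety": 0.2, "Joy": 0.15}

mismatches = modality_gaps(face, voice)
```

A positive value means the face shows more of that emotion than the voice does, and a negative value means the opposite.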

Hume AI Pro Tips and Shortcuts

After testing Hume AI for over 6 months, here are my best tips.

Keyboard Shortcuts

| Action | Shortcut |
|---|---|
| Generate audio | Ctrl + Enter |
| Play/Pause preview | Spacebar |
| Switch between voices | Ctrl + Shift + V |
| Open voice library | Ctrl + L |

Hidden Features Most People Miss

- Voice Conversion API: Swap one voice for another while keeping exact timing and phonetics — perfect for dubbing without re-recording.

- Phoneme Editing: Adjust pronunciation at the phoneme level to fix custom names or add specific word emphasis.

- Cross-Language Accent Prediction: Clone a voice in one language and Octave 2 predicts the natural accent when speaking another language.

Hume AI Common Mistakes to Avoid

Mistake #1: Using Generic Voice Descriptions

❌ Wrong: Typing “male voice” or “female voice” and expecting great results.

✅ Right: Use detailed descriptions like “a confident 35-year-old American woman speaking warmly.”

Mistake #2: Ignoring Overage Costs

❌ Wrong: Running high-volume generation without checking your usage limits.

✅ Right: Monitor usage in the billing dashboard and upgrade your plan before hitting overage charges.
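
A quick pre-flight check can tell you whether a batch of scripts fits your monthly allowance before you generate anything. This sketch uses the 10,000-character free tier quoted in this guide and counts characters with Python's `len`. How Hume actually counts characters for billing is an assumption here, so treat the result as an estimate.

```python
FREE_TIER_CHARS = 10_000  # monthly characters on the free plan, per this guide


def characters_remaining(quota, scripts, already_used=0):
    """Estimate characters left after generating every script in the batch."""
    planned = sum(len(s) for s in scripts)
    return quota - already_used - planned


def fits_in_quota(quota, scripts, already_used=0):
    """True if the whole batch fits without going over the quota."""
    return characters_remaining(quota, scripts, already_used) >= 0
```

A negative result from `characters_remaining` is the number of characters that would land in overage.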

Mistake #3: Using the Free Plan for Commercial Projects

❌ Wrong: Publishing audio made on the free tier in commercial content.

✅ Right: Upgrade to at least the Starter plan ($3/month) to get commercial licensing rights.

Hume AI Troubleshooting

Problem: Audio Generation Sounds Flat or Robotic

Cause: Your text input lacks emotional context for Octave to interpret.

Fix: Add emotional descriptions in your prompt like “speak with warmth and urgency.” Also try adding punctuation and natural pauses in your text.

Problem: Voice Clone Doesn’t Sound Like the Original

Cause: The source audio recording has background noise or is too short.

Fix: Cloning accepts recordings as short as 5 seconds, but use a clean recording of at least 15 seconds for best results. Remove background music or noise before uploading.

Problem: API Key Returns “Unauthorized” Error

Cause: Your API key is expired, invalid, or your account has no credits remaining.

Fix: Generate a new API key from the Settings page. Check your billing dashboard to make sure your account has active credits.

📌 Note: If none of these fix your issue, contact Hume AI support at billing@hume.ai.

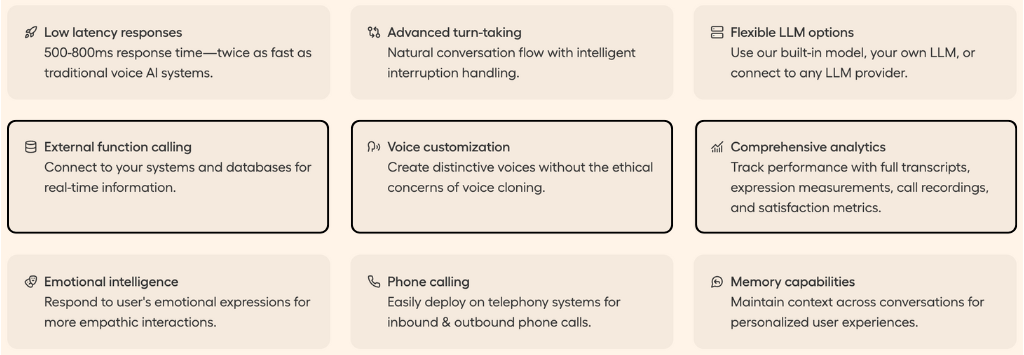

What is Hume AI?

Hume AI is a voice AI and emotion detection platform that generates expressive speech and analyzes human emotions.

Think of it like a voice actor that never gets tired — one that actually understands the emotion behind every word it speaks.

Watch this quick overview:

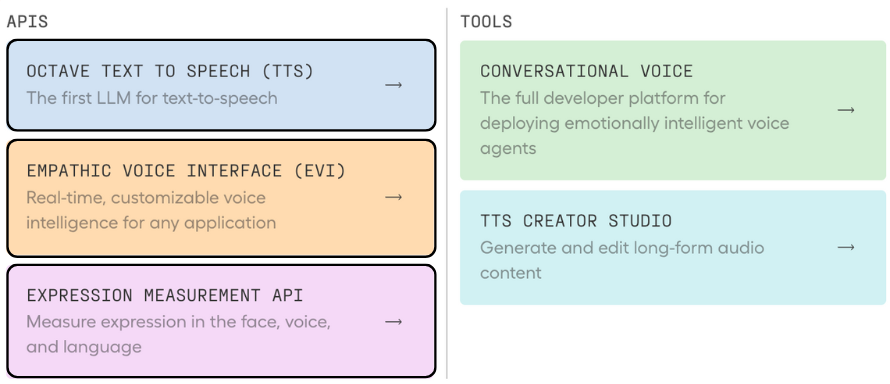

It includes these key features:

- Octave TTS: First text-to-speech model built on LLM intelligence for emotion-aware speech generation in 11 languages.

- Empathic Voice Interface (EVI): Real-time conversational AI that detects and responds to user emotions.

- Expression Measurement API: Detects 25+ emotions from voice, facial expressions, and text.

- Conversational Voice: Low-latency voice interactions for apps, games, and virtual assistants.

- TTS Creator Studio: Multi-character audio production with script editor and voice assignment.

- Custom Voice Persona: Create unique voices from text prompts or audio recordings as short as 5 seconds.

- Multimodal Analysis: Combined emotion analysis across audio, video, and text inputs.

For a full review, see our Hume AI review.

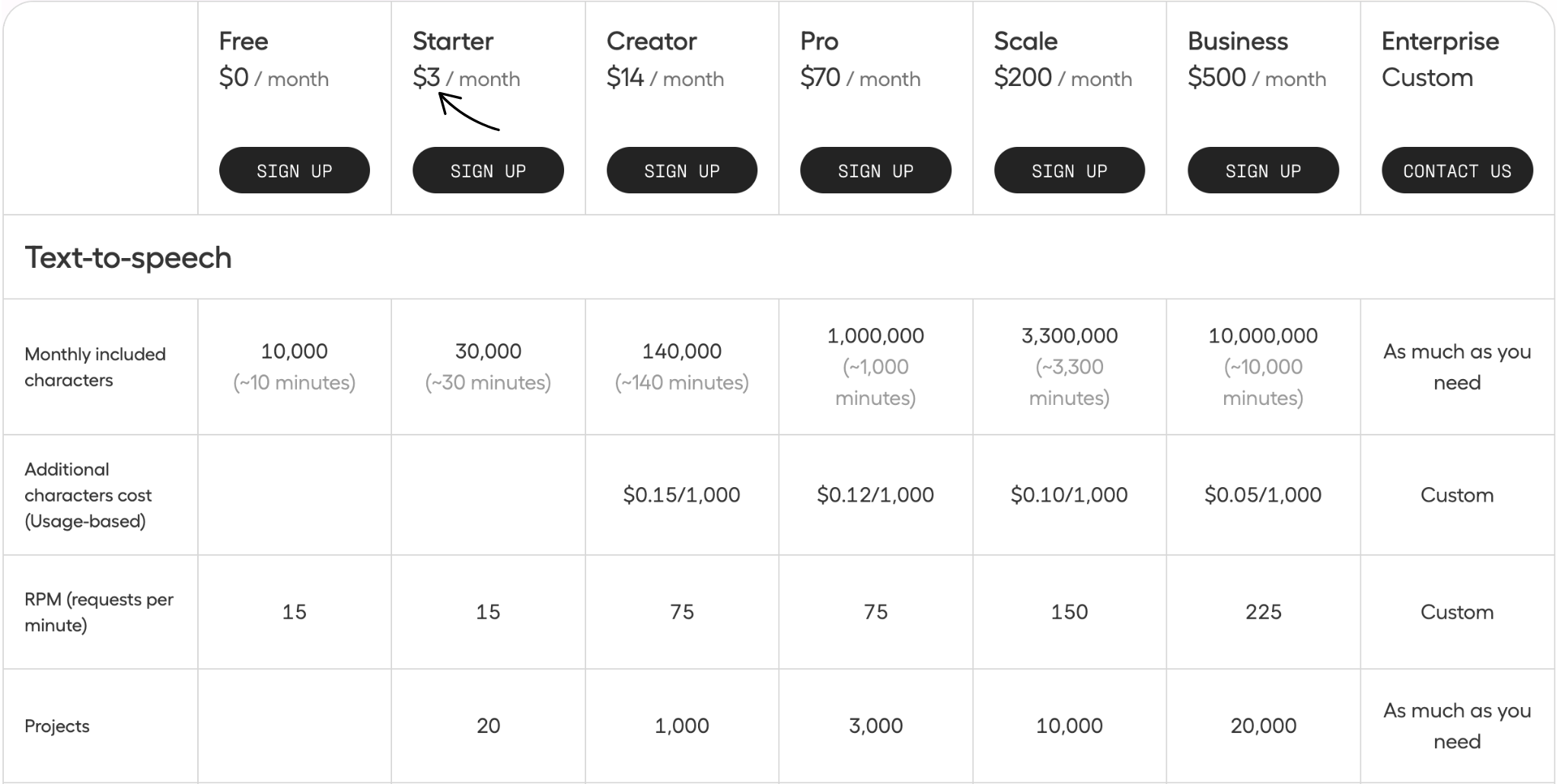

Hume AI Pricing

Here’s what Hume AI costs in 2026:

| Plan | Price (per month) | Best For |
|---|---|---|
| Free | $0 | Testing features with 10,000 characters/month |
| Starter | $3 | Hobbyists who need commercial licensing |
| Creator | $14 | Content creators with unlimited voice cloning |
| Pro | $70 | Professional studios and agencies |
| Scale | $200 | High-volume production teams |
| Business | $500 | Enterprise teams with advanced needs |
| Enterprise | Contact Sales | Custom deployments with dedicated support |

Free trial: Yes — free tier includes 10,000 TTS characters and 5 EVI minutes per month.

Money-back guarantee: No formal guarantee, but you can cancel anytime.

💰 Best Value: Creator ($14/month) — includes unlimited voice cloning, commercial license, and 140,000 characters per month.

Hume AI vs Alternatives

How does Hume AI compare? Here’s the competitive landscape:

| Tool | Best For | Price | Rating |
|---|---|---|---|
| Hume AI | Emotional voice AI | $0–$500/mo | ⭐ 4.2 |
| ElevenLabs | Top voice quality (4.7 MOS) | $0–$82.50/mo | ⭐ 4.7 |
| Murf AI | Enterprise video workflows | $19–$199/mo | ⭐ 4.3 |
| Speechify | Personal text-to-speech | $0–$29/mo | ⭐ 4.2 |
| Descript | All-in-one audio/video editing | $0–$50/mo | ⭐ 4.5 |
| PlayHT | Conversational AI voices | $0–$49/mo | ⭐ 4.1 |
| Lovo AI | Multilingual voice content | $24–$75/mo | ⭐ 4.0 |
| TTSOpenAI | Developer API integration | Pay per use | ⭐ 4.3 |

Quick picks:

- Best overall: ElevenLabs — highest voice quality scores and fastest generation at 75ms

- Best budget: Hume AI — free tier plus $3/month starter with commercial license

- Best for beginners: Speechify — simple interface with no technical setup needed

- Best for emotional AI: Hume AI — only voice platform with built-in emotion detection and empathetic responses

🎯 Hume AI Alternatives

Looking for Hume AI alternatives? Here are the top options:

- 🚀 TTSOpenAI: Developer-friendly pay-as-you-go TTS API powered by OpenAI’s voice models with fast integration.

- 🎨 Murf AI: Professional voiceover studio with 200+ voices, 30+ languages, and a built-in video editor for teams.

- 👶 Speechify: Beginner-friendly text-to-speech app that reads any text aloud with natural voices on any device.

- ⚡ Descript: All-in-one audio and video editor with AI voice cloning, transcription, and podcast editing tools.

- 🌟 ElevenLabs: Industry-leading voice quality with 32 languages, fastest generation speed, and extensive voice library.

- 💰 PlayHT: Affordable AI voice platform with conversational voice models and an easy-to-use API for developers.

- 🧠 Lovo AI: AI voice generator with 500+ voices in 100+ languages and built-in video creation features.

- 🎯 Listnr: Text-to-speech tool focused on podcast creators with audio embedding and distribution features.

- 🔧 Podcastle: Podcast recording and editing platform with AI voice generation and background noise removal.

- 💼 DupDub: Budget-friendly AI voiceover tool with 300+ voices for social media and marketing videos.

- 🏢 WellSaid Labs: Enterprise-grade voice platform with brand-consistent voices for corporate training and marketing.

- 📊 Revoicer: One-click AI voiceover generator with 100+ voices focused on simple, fast audio creation.

- 🔒 ReadSpeaker: Enterprise TTS provider with custom pricing, used by education and accessibility organizations.

- ⭐ NaturalReader: Personal and professional text-to-speech with document upload and Chrome extension support.

- 🔥 Altered: Voice transformation platform that changes your voice in real-time for creative and professional use.

- 🎨 Speechelo: One-time purchase voiceover tool that converts text to natural speech with emotion controls.

For the full list, see our Hume AI alternatives guide.

⚔️ Hume AI Compared

Here’s how Hume AI stacks up against each competitor:

- Hume AI vs TTSOpenAI: Hume AI wins on emotion control and voice design. TTSOpenAI wins on simple API pricing and developer speed.

- Hume AI vs Murf AI: Murf AI wins for enterprise video workflows. Hume AI wins for emotional voice generation and custom personas.

- Hume AI vs Speechify: Speechify wins for casual personal use. Hume AI wins for developers building emotion-aware voice apps.

- Hume AI vs Descript: Descript wins as an all-in-one editor. Hume AI wins for dedicated voice AI with emotional depth.

- Hume AI vs ElevenLabs: ElevenLabs wins on raw voice quality and speed. Hume AI wins on emotional understanding and empathetic voice features.

- Hume AI vs PlayHT: PlayHT wins on conversational voice pricing. Hume AI wins on emotion detection and multimodal analysis.

- Hume AI vs Lovo AI: Lovo AI wins on language variety. Hume AI wins on voice expressiveness and emotional tone control.

- Hume AI vs Listnr: Listnr wins for podcast distribution. Hume AI wins for voice quality and emotion-driven speech.

- Hume AI vs Podcastle: Podcastle wins for podcast editing. Hume AI wins for expressive voice generation and API access.

- Hume AI vs DupDub: DupDub wins on budget pricing. Hume AI wins on voice realism and emotional range.

- Hume AI vs WellSaid Labs: WellSaid Labs wins for enterprise consistency. Hume AI wins for emotional expression and voice cloning.

- Hume AI vs Revoicer: Revoicer wins for quick one-click voiceovers. Hume AI wins for nuanced emotional delivery.

- Hume AI vs ReadSpeaker: ReadSpeaker wins for accessibility and education. Hume AI wins for creative voice design and API power.

- Hume AI vs NaturalReader: NaturalReader wins for simple document reading. Hume AI wins for expressive content creation.

- Hume AI vs Altered: Altered wins for real-time voice changing. Hume AI wins for text-to-speech quality and emotion AI.

- Hume AI vs Speechelo: Speechelo wins on one-time pricing. Hume AI wins on every quality and feature metric.

Start Using Hume AI Now

You learned how to use every major Hume AI feature:

- ✅ Octave TTS

- ✅ Empathic Voice Interface (EVI)

- ✅ Expression Measurement API

- ✅ Conversational Voice

- ✅ TTS Creator Studio

- ✅ Custom Voice Persona

- ✅ Multimodal Analysis

Next step: Pick one feature and try it now.

Most people start with Octave TTS.

It takes less than 5 minutes.

Frequently Asked Questions

How do I use Hume text-to-speech?

Sign up for a free Hume AI account at app.hume.ai. Open the TTS playground, choose a voice or create one from a text prompt, type your text, and click “Generate.” You can add emotional direction like “speak warmly” to control the tone. Download the audio file when finished.

What is Hume AI used for?

Hume AI is used for generating expressive AI voices, building empathetic voice agents, and detecting emotions from audio, video, and text. Common use cases include audiobook narration, podcast voiceovers, customer service agents, video game characters, and emotional analysis for research.

How much does Hume AI cost?

Hume AI offers a free plan with 10,000 characters per month. Paid plans are Starter ($3/month), Creator ($14/month), Pro ($70/month), Scale ($200/month), and Business ($500/month). Enterprise plans have custom pricing. All paid plans include commercial licensing.

Is Hume AI safe?

Yes, Hume AI is a legitimate company backed by significant venture funding. It was founded in 2021 by Alan Cowen, a former Google researcher. The platform includes ethical safeguards for voice cloning, and enterprise plans offer SOC 2, GDPR, and HIPAA compliance features.

What is the difference between Hume and ElevenLabs?

ElevenLabs focuses on raw voice quality and speed with the fastest generation (75ms) across 32 languages. Hume AI focuses on emotional understanding — its Octave model interprets context and emotions to deliver nuanced speech. Choose ElevenLabs for pure voice quality, Hume AI for emotion-aware voice applications.