Are you struggling with the ever-increasing demands of AI and machine learning workloads?

Do you find yourself limited by your local hardware?

The frustration is real. This is where RunPod steps in.

In this RunPod review, we’ll examine whether it’s truly the best GPU cloud solution for supercharging your AI endeavors.

Ready to experience the power of scalable GPUs? Over 10,000 users have already chosen RunPod for their AI/ML needs, launching over 500,000 instances.

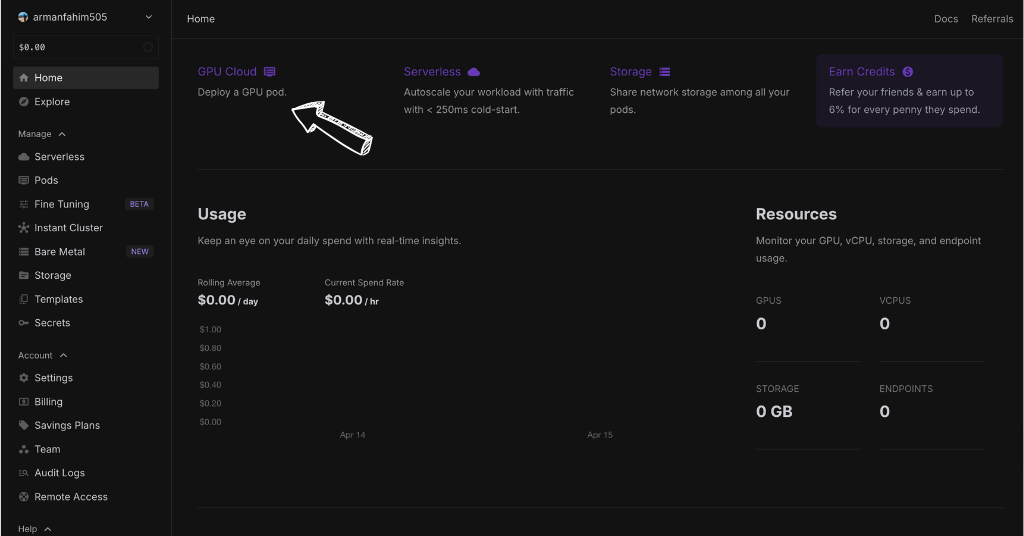

What is RunPod?

Think of RunPod as a place where you can easily create powerful computers in the cloud.

These computers have special parts called GPUs that are super good at doing math for things like AI.

You don’t have to worry about setting up or managing servers.

You pick what you need, like a pod with the right amount of power.

Then, you can deploy your AI projects on this pod very quickly.

RunPod also helps you create an endpoint, which is like a web address that lets other programs or people use your AI models easily.

So, RunPod makes it simple to use powerful computers for your AI projects without all the complicated techy stuff.
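To make the endpoint idea more concrete, here is a minimal sketch of how another program might prepare a call to such an endpoint. The base URL, the `runsync` route, the bearer-token header, and the `{"input": ...}` payload shape are assumptions based on our reading of RunPod's public serverless API, so check them against the current documentation. The endpoint ID and API key are placeholders, and the request is only built here, not sent.

```python
import json
import urllib.request

# Assumed base URL for serverless endpoints; verify against RunPod's docs.
API_BASE = "https://api.runpod.ai/v2"


def build_endpoint_request(endpoint_id: str, api_key: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request for a synchronous endpoint call."""
    # Assumed route: /<endpoint_id>/runsync waits for the result in one call.
    url = f"{API_BASE}/{endpoint_id}/runsync"
    # Assumed payload shape: the model input is wrapped in an "input" key.
    body = json.dumps({"input": payload}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values for illustration only.
request = build_endpoint_request("my-endpoint-id", "MY_API_KEY", {"prompt": "Hello"})
print(request.full_url)
```

With a real endpoint ID and key, passing the request to `urllib.request.urlopen` would send it. Building the request separately makes it easy to inspect the URL and payload first.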

Who Created RunPod?

RunPod was created by Zhen Lu, who is the current CEO.

The company was founded in 2022.

The idea for RunPod came from the need for easier access to powerful computers for AI work.

Zhen and his team wanted to create an easy way for people to rent powerful computers for their needs without the hardware hassle.

Their vision was to make powerful computing resources available to everyone, helping them deploy and scale their AI projects affordably.

Top Benefits of RunPod

- Easy Serverless Deployment: Run your AI applications without managing any servers. RunPod’s serverless architecture handles the backend so you can focus on your code.

- Pre-built Templates: Get started quickly with ready-to-use template images for popular ML frameworks and tools, saving you setup time.

- Scalable Inference: Easily scale your inference workloads as demand grows, ensuring your applications remain responsive even during peak usage.

- Flexible Network Volume: With adaptable network volume options, you can choose the right amount of storage for your project needs.

- Global Data Center Locations: Access powerful cloud computing resources from various data center locations, reducing latency and improving performance.

- Detailed Logging: Keep track of your application’s activity and troubleshoot issues effectively with comprehensive log files.

- Cost-Effective Startup Solution: Startup companies and individual developers can access powerful GPUs at competitive prices, democratizing AI development.

- Scalable Enterprise Solutions: Enterprise clients can leverage RunPod’s infrastructure for large-scale ML deployments and demanding workloads.

- Seamless Docker Integration: Easily deploy your containerized applications using Docker, ensuring portability and consistent environments.

Best Features

RunPod has some really cool tools that make working with AI much simpler and faster.

These features help you use powerful computers in the cloud without a lot of fuss.

Let’s look at some of the best things RunPod offers:

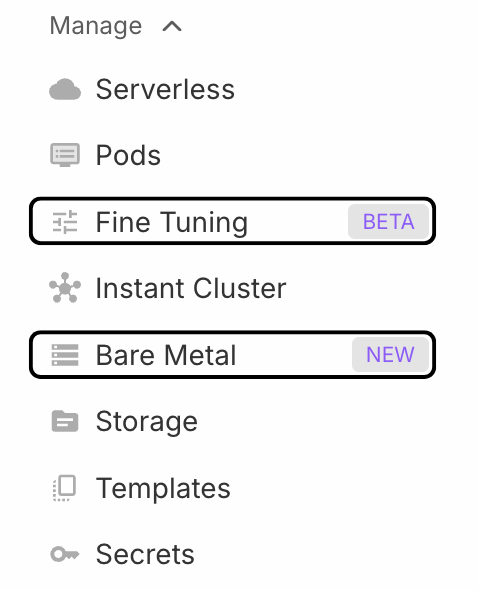

1. Pods

With RunPod, you can rent a pod, which is like your own super-fast computer in the cloud.

You can pick the type of GPU that you need for your project.

This means you only pay for the power you actually use.

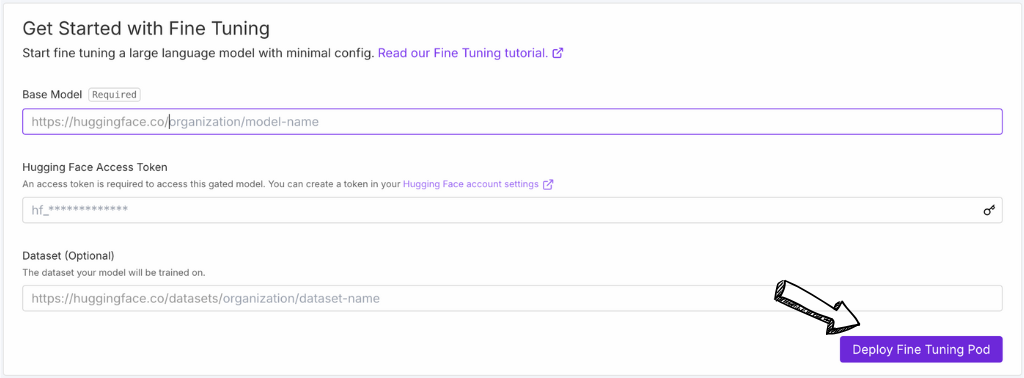

2. Fine-Tuner

The Fine-tuner feature makes it easier to teach AI models new things.

Instead of starting from scratch, you can take an existing open-source model and train it with your data.

RunPod gives you the tools to do this efficiently, saving you time and effort.

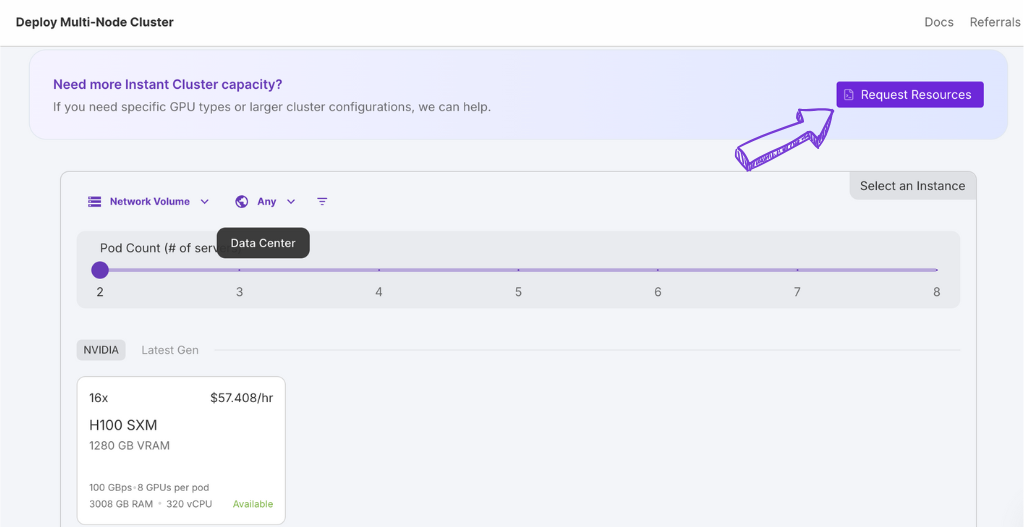

3. Instant Clusters

Need even more power?

Instant Cluster lets you quickly connect many pod computers.

It’s like having your own mini supercomputer ready in seconds on this cloud platform.

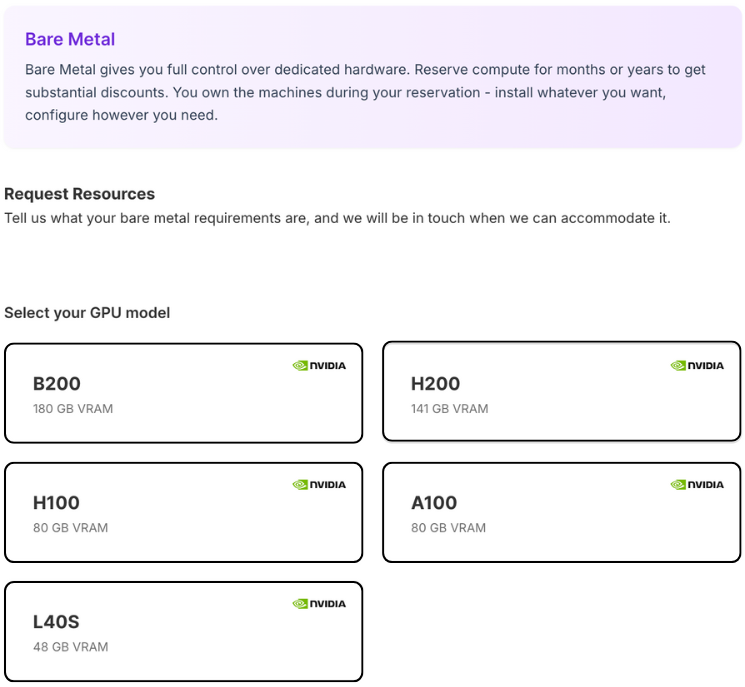

4. Bare Metal

For those who want full control, Bare Metal gives you direct access to the computer hardware.

This means you have complete power over everything, just like having your own physical server in a data center but without the setup hassle.

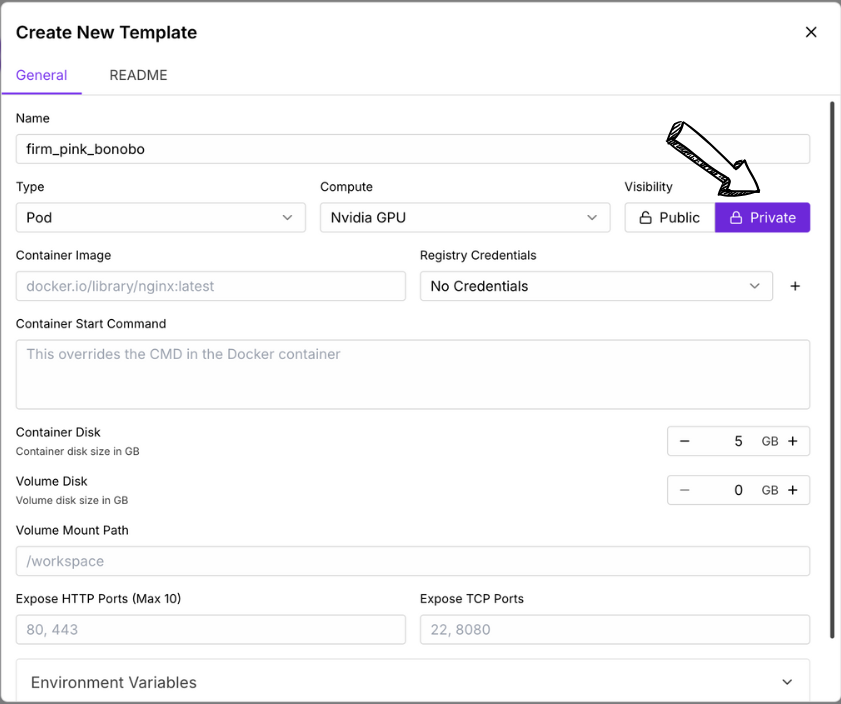

5. Template Maker

Want to save your favorite setups?

Template Maker lets you create your own custom template images.

This means you can save all your software and settings, so next time you need a similar setup, it’s ready to go in a snap.

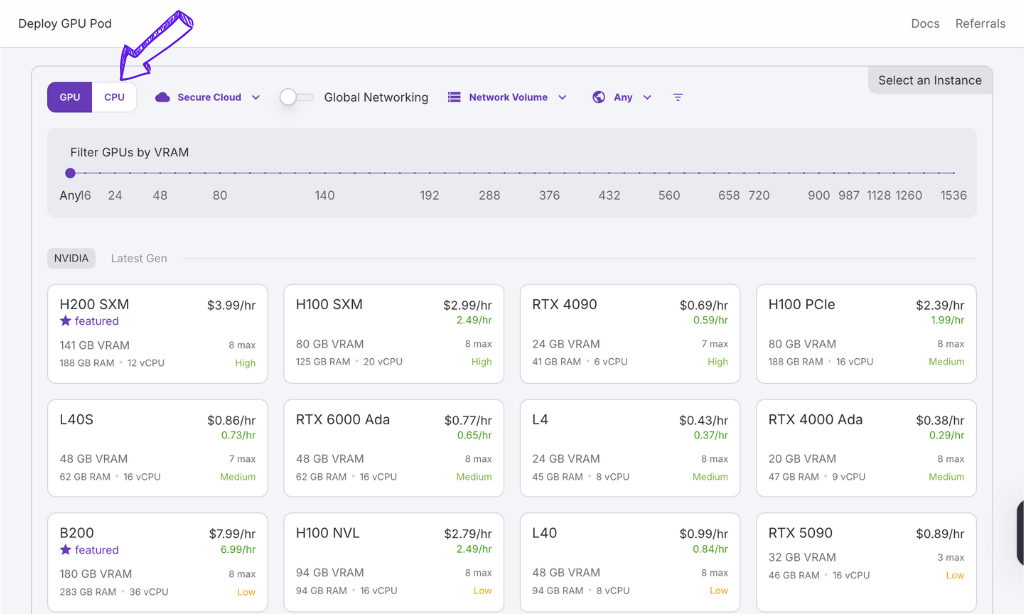

Pricing

| GPU | Starting at (per hour) |
| --- | --- |
| H100 NVL | $2.59 |
| H200 SXM | $3.59 |
| H100 PCIe | $1.99 |
| H100 SXM | $2.69 |
| A100 PCIe | $1.19 |
| A100 SXM | $1.89 |
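
To get a feel for what these rates mean for a real job, a rough estimate can be worked out with a few lines of Python. This is a sketch under stated assumptions: the prices are the starting rates from the table, we assume they are billed per GPU per hour, and storage or other charges are ignored. The function name `estimate_cost` is our own, not part of any RunPod tool.

```python
# Starting rates in USD, copied from the pricing table.
# Assumption: each rate is per GPU per hour; storage and other fees are ignored.
HOURLY_RATES_USD = {
    "H100 NVL": 2.59,
    "H200 SXM": 3.59,
    "H100 PCIe": 1.99,
    "H100 SXM": 2.69,
    "A100 PCIe": 1.19,
    "A100 SXM": 1.89,
}


def estimate_cost(gpu: str, hours: float, gpu_count: int = 1) -> float:
    """Return the estimated compute cost in USD, rounded to cents."""
    if hours < 0 or gpu_count < 1:
        raise ValueError("hours must be non-negative and gpu_count at least 1")
    return round(HOURLY_RATES_USD[gpu] * hours * gpu_count, 2)


# Compare an 8-hour job on two of the listed GPUs.
for gpu in ("A100 PCIe", "H100 SXM"):
    print(f"{gpu}: ${estimate_cost(gpu, hours=8):.2f} for 8 hours")
```

Because these are starting prices, treat the result as a lower bound and confirm the current rates on RunPod's website.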

Pros and Cons

Pros

Cons

Alternatives of RunPod

While RunPod offers compelling features for GPU cloud computing, several other platforms cater to similar needs.

Here are a few alternatives to consider:

- Amazon EC2 (with P instances): A widely used cloud platform offering a broad range of GPU instances. It boasts a mature ecosystem and extensive services but can be more complex and potentially pricier for smaller startups.

- Google Cloud Platform (GCP – Compute Engine with A100/H100): Another major cloud computing provider with powerful GPU options and strong integration with its ML and AI inference services. Similar to AWS, it offers a broad suite of tools.

- Microsoft Azure (NV-series VMs): Microsoft’s cloud platform provides various GPU virtual machines optimized for compute-intensive tasks. It integrates well with other Microsoft products and services.

- Lambda Labs GPU Cloud: Specializes in GPU cloud computing, often with competitive pricing and a focus on deep learning workloads. It offers both on-demand and reserved instances.

- Paperspace: Offers a user-friendly interface and various GPU options, including pay-as-you-go and dedicated instances. It’s known for its ease of use, particularly for ML development and deployment.

- CoreWeave: Focuses on high-performance computing and offers powerful GPU instances optimized for demanding enterprise and research workloads. They often highlight cost-effectiveness for large-scale inference and training.

Evaluating your specific needs and comparing them against these platforms will help you choose the best cloud platform for your AI projects.

Personal Experience with RunPod

Our team recently needed a robust and cost-effective solution for deploying our latest AI model.

After exploring several options, we decided to try RunPod.

Our experience was quite positive, particularly with the following aspects:

- Effortless Serverless Endpoint Deployment: Setting up a serverless endpoint for our model was surprisingly straightforward. We could quickly deploy our trained model and make it accessible via a simple API call.

- Seamless Docker Integration: Our model was already containerized using Docker, and RunPod integrated perfectly with this. Deploying our Docker image was a breeze.

- Cost Savings: Compared to some of the larger cloud platform options we considered, RunPod offered significant cost savings for the GPU resources we needed.

- Easy Access to Powerful GPUs: We were able to choose a pod with the specific GPU that matched our inference requirements, ensuring optimal performance without overspending.

- Simplified Repository Management: Integrating our model from our repository (a private GitLab instance) was well-documented and easy to set up.

Overall, RunPod provided us with a flexible, affordable, and efficient way to deploy our AI model using a serverless endpoint.

It let us leverage the power of its GPUs and simplified the deployment process from our existing repository.
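
For readers curious about what sits behind a serverless endpoint, here is a minimal sketch of a worker handler. It is an illustration, not the exact code from the deployment described above. We assume the convention, as we understand it from RunPod's Python SDK, that a handler receives a job dictionary with an `input` key and returns a JSON-serializable result. `fake_model` is a hypothetical stand-in for a real model, and the SDK start-up call is left as a comment because it should be verified against the SDK documentation.

```python
def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for real model inference."""
    return prompt.upper()


def handler(job: dict) -> dict:
    """Process one job: read its input, run the model, return a result dict."""
    job_input = job.get("input", {})
    prompt = job_input.get("prompt")
    # Reject jobs without a usable prompt instead of crashing the worker.
    if not isinstance(prompt, str) or not prompt:
        return {"error": "Missing 'prompt' in job input."}
    return {"output": fake_model(prompt)}


# Local smoke test with a hand-written job; no cloud account is needed.
print(handler({"input": {"prompt": "hello"}}))

# In a deployed worker, the SDK would be handed this function, roughly:
#   import runpod
#   runpod.serverless.start({"handler": handler})
# (assumed entry point; check the SDK documentation before relying on it)
```

Keeping the handler a plain function means it can be tested locally before the Docker image is built and deployed.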

Final Thoughts

So, is RunPod the right choice for you?

If you need powerful computers in the cloud for AI without spending a fortune, it’s definitely worth checking out.

It makes it easy to get started with pre-built templates and serverless options.

You can pick the exact power you need and even rent super-fast GPUs.

If you want a straightforward way to deploy your AI models and need serious computing power, RunPod offers a strong option.

Ready to see how much you can save and how quickly you can get started?

Visit RunPod today and explore their GPU options!

Frequently Asked Questions

What exactly is RunPod?

RunPod lets you rent powerful computers with GPUs in the cloud. It makes it easy to run AI and machine learning tasks without needing your own expensive hardware. You can deploy applications using serverless options or rent entire pods.

How much does RunPod cost?

RunPod’s pricing depends on the type of GPU and how long you use it. The Community Cloud is often cheaper, while the Secure Cloud offers more stable performance. Check their website for the latest pricing details.

Is RunPod easy to use?

Yes, RunPod is designed to be user-friendly, especially with its pre-built templates and serverless options. However, some features like Bare Metal might require more technical knowledge.

What kind of GPUs does RunPod offer?

RunPod offers a variety of GPUs, from more affordable options to high-end cards like A100 and H100, suitable for different AI inference and ML workloads.

Can I use Docker with RunPod?

Yes, RunPod has seamless Docker integration, which makes it easy to deploy containerized applications on its platform.